Slide 1

Slide 2

Web standards don't exist. At least, they don't physically exist. They are intangible. They're in good company.

Slide 3

Feelings are intangible, but real. Hope. Despair.

Slide 4

Ideas are intangible: liberty, justice, socialism, capitalism.

Slide 5

The economy. Currency. All intangible. I'm sure we've all had those "college thoughts":

> Money isn't real, man! They're just bits of metal and pieces of paper! Wake up, sheeple!

Slide 6

Nations are intangible. Geographically, France is a tangible, physical place. But France, the Republic, is an idea. Geographically, North America is a real, tangible, physical land mass. But ideas like "Canada" and "The United States" only exist in our minds.

Slide 7

Faith—the feeling—is intangible. God—the idea—is intangible. Art—the concept—is intangible.

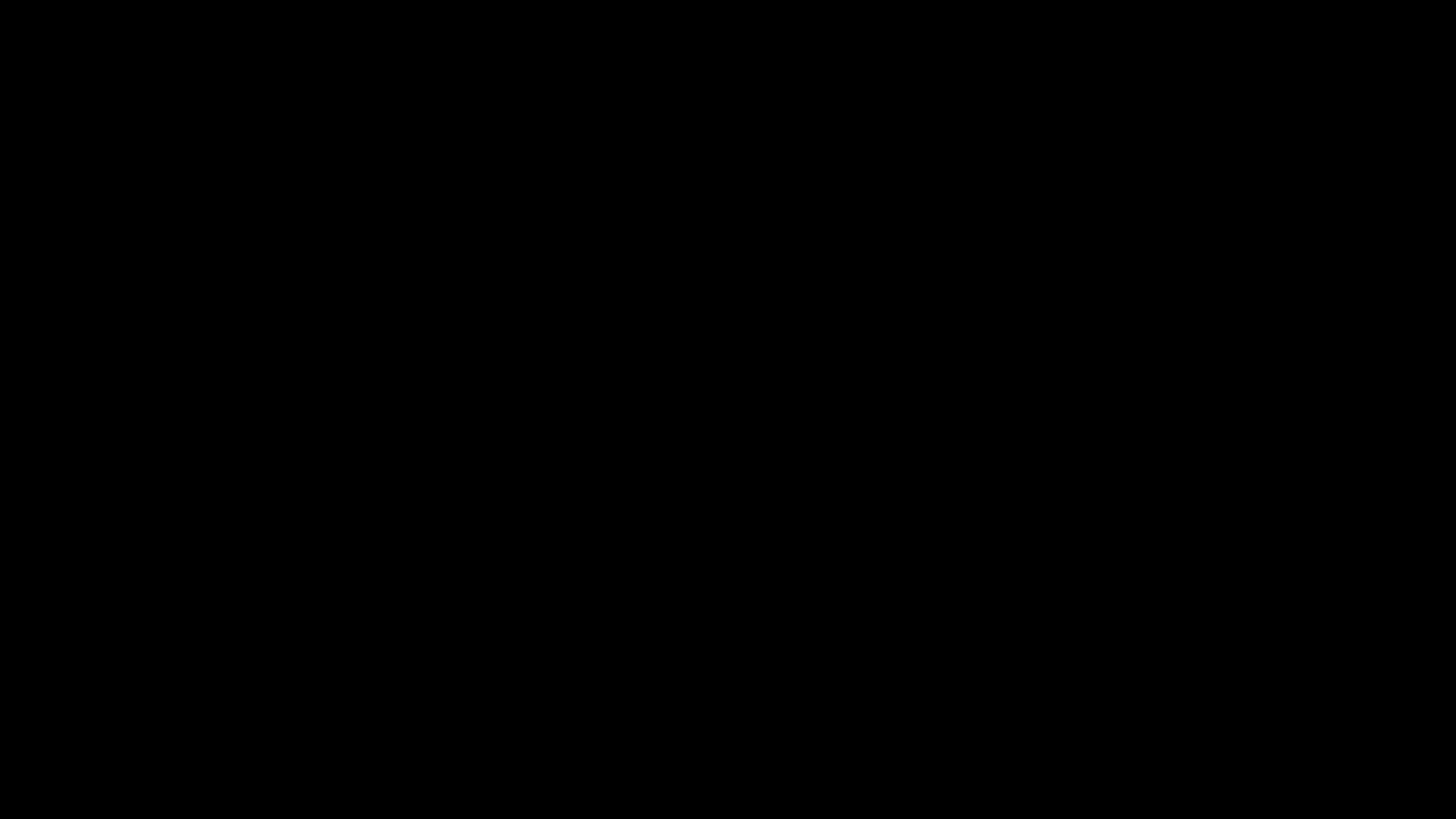

Slide 8

A piece of art is an instantiation of the intangible concept of what art is. Incidentally, I quite like Brian Eno's working definition of what art is. Art is anything we don't have to do. We don't have to make paintings, or sculptures, or films, or music. We have to clothe ourselves for practical reasons, but we don't have to make clothes beautiful. We have to prepare food to eat it, but don't have to make it a joyous event. By this definition, sports are also art.

Slide 9

We don't have to play football. Sports are also intangible. A game of football is an instantiation of the intangible idea of what football is. Football, chess, rugby, quidditch and rollerball are equally (in)tangible. But football, chess and rugby have more consensus. (Christianity, Islam, Judaism, and The Force are equally intangible, but Christianity, Islam, and Judaism have a bit more consensus than The Force).

Slide 10

HTML is intangible. A web page is an instantiation of the intangible idea of what HTML is. But we can document our shared consensus. A rule book for football is like a web standard specification. A documentation of consensus.

Slide 11

By the way, economics, religions, sports and laws are all examples of intangibles that can't be proven, because they all rely on their own internal logic—there is no outside data that can prove football or Hinduism or capitalism to be "true".

Slide 12

That's very different to ideas like gravity, evolution, relativity, or germ theory—they are all intangible but provable. They are discovered, rather than created. They are part of objective reality. Consensus reality is the collection of intangibles that we collectively agree to be true: economy, religion, law, web standards.

Slide 13

We treat consensus reality much the same as we treat objective reality: in our minds, football, capitalism, and Christianity are just as real as buildings, trees, and stars. Sometimes consensus reality and objective reality get into fights.

Slide 14

Some people have tried to make a consensus reality around the accuracy of astrology or the efficacy of homeopathy, or ideas like the Earth being flat, 9-11 being an inside job, the moon landings being faked, the Holocaust never having happened, or vaccines causing autism. These people are unfazed by objective reality, which disproves each one of these ideas.

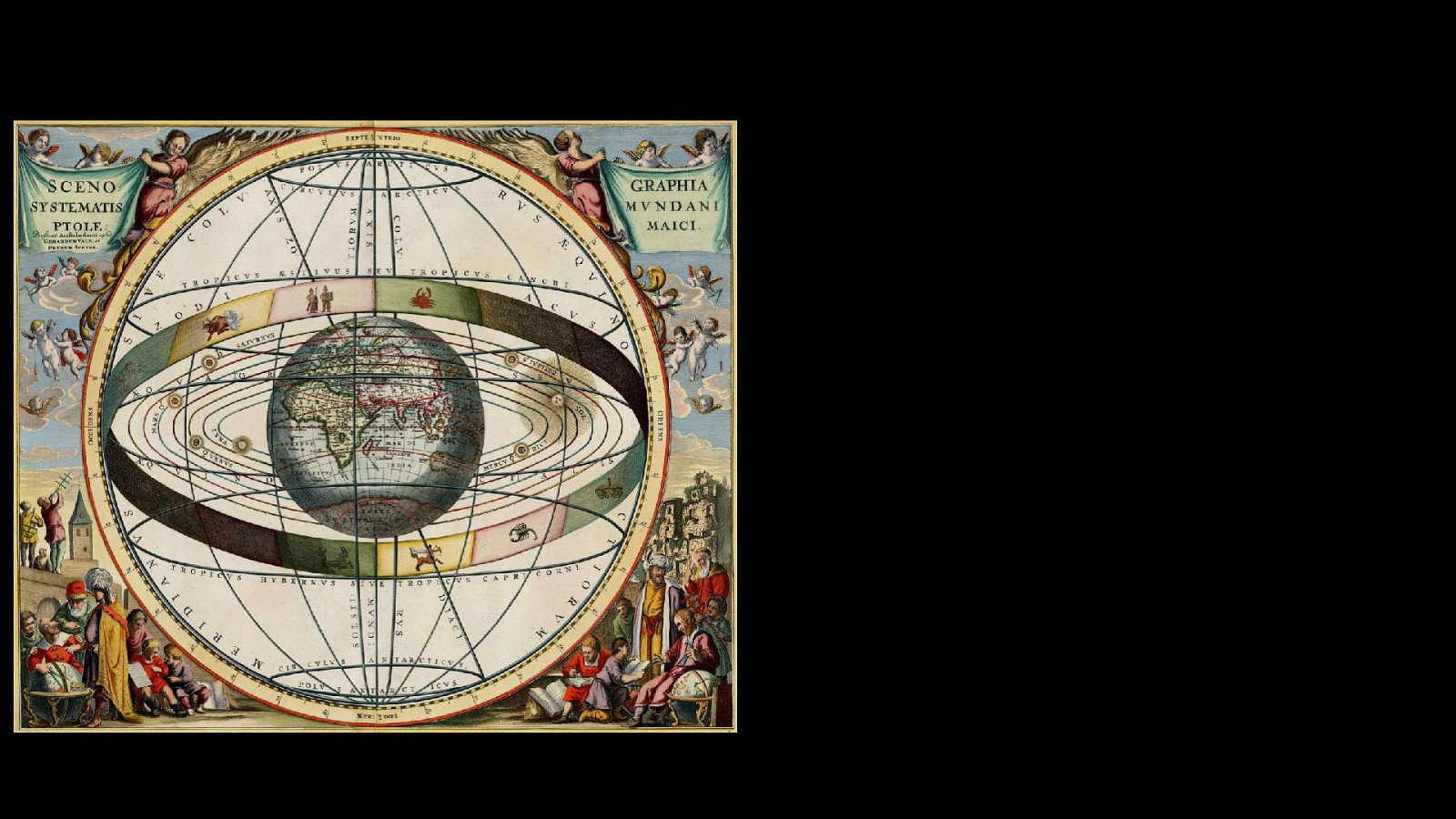

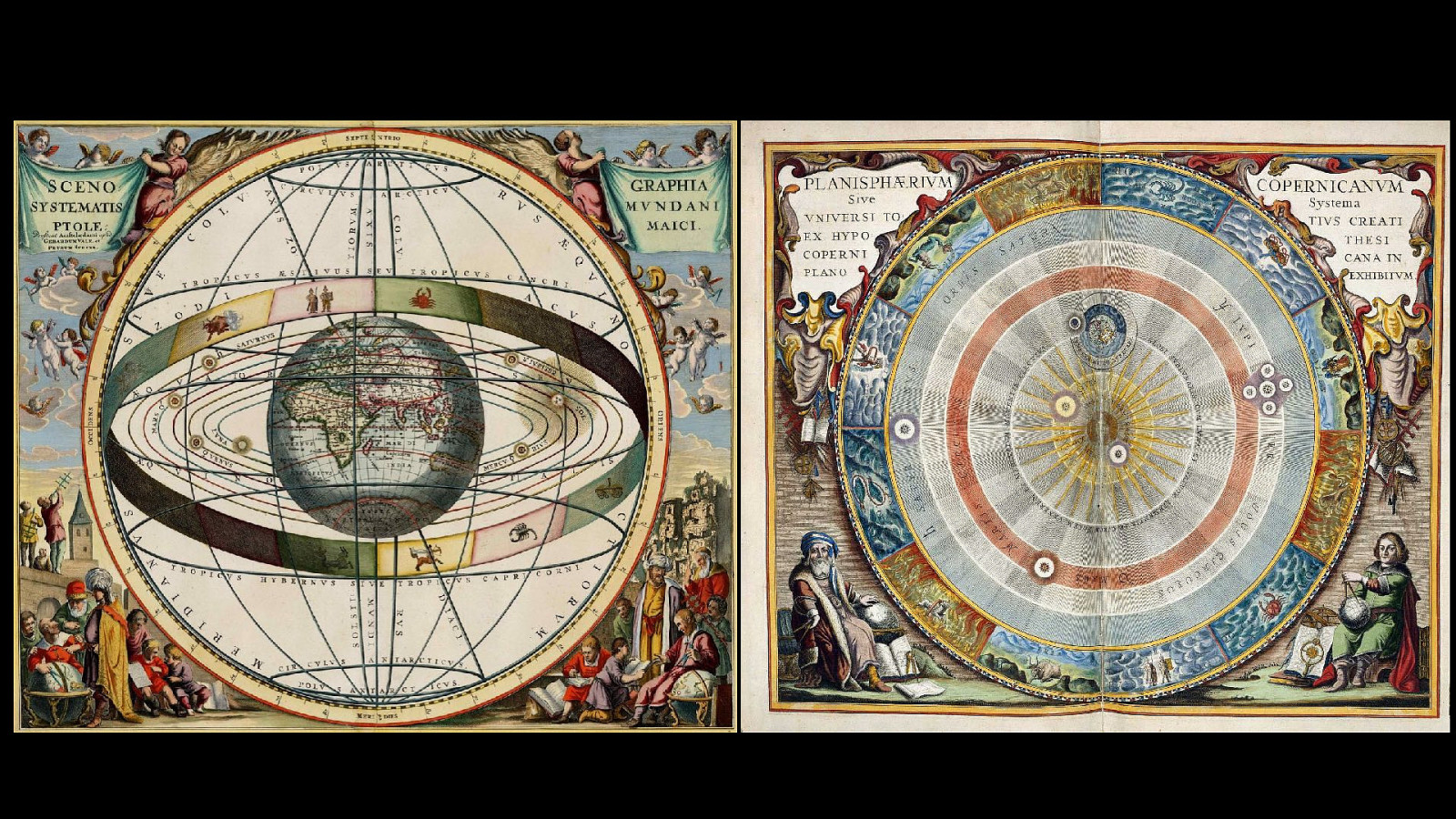

Slide 15

For a long time, the consensus reality was that the sun revolved around the Earth.

Slide 16

Copernicus and Galileo demonstrated that the objective reality was that the Earth (and all the other planets in our solar system) revolve around the sun. After the dust settled on that particular punch-up, we switched up our consensus reality. We changed the story.

Slide 17

That's another way of thinking about consensus reality: our currencies, our religions, our sports and our laws are stories that we collectively choose to believe. Web standards are a collection of intangibles that we collectively agree to be true. They're our stories. They're our collective consensus reality. They are what web browsers agree to implement, and what we agree to use.

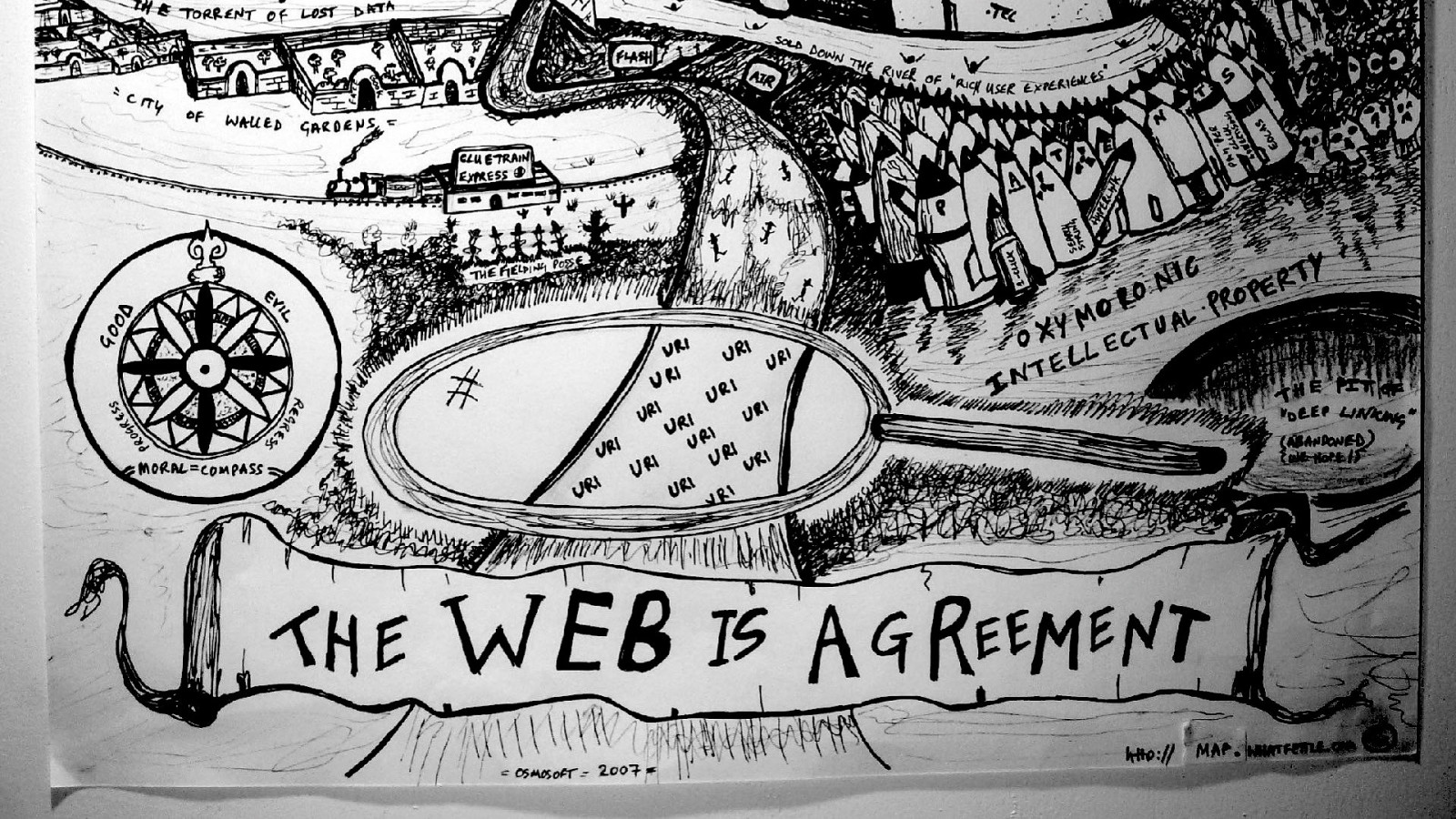

Slide 18

The web is agreement.

Slide 19

For human beings to collaborate together...

Slide 20

...they need a shared purpose. They must have a shared consensus reality—a shared story.

Slide 21

Once a group of people share a purpose, they can work together to establish principles. Design principles are points of agreement. There are design principles underlying every human endeavour. Sometimes they are tacit. Sometimes they are written down.

Slide 22

Patterns emerge from principles. Here's an example of a human endeavour: the creation of a nation state, like the United States of America.

1. The purpose is agreed in the Declaration of Independence.
2. The principles are documented in the Constitution.
3. The patterns emerge in the form of laws.

Slide 23

HTML elements, CSS features, and JavaScript APIs are all patterns (that we agree upon). Those patterns are informed by design principles.

Slide 24

I've been collecting design principles of varying quality at principles.adactio.com. Here's one of the design principles behind HTML5. It's my personal favourite—

Slide 25

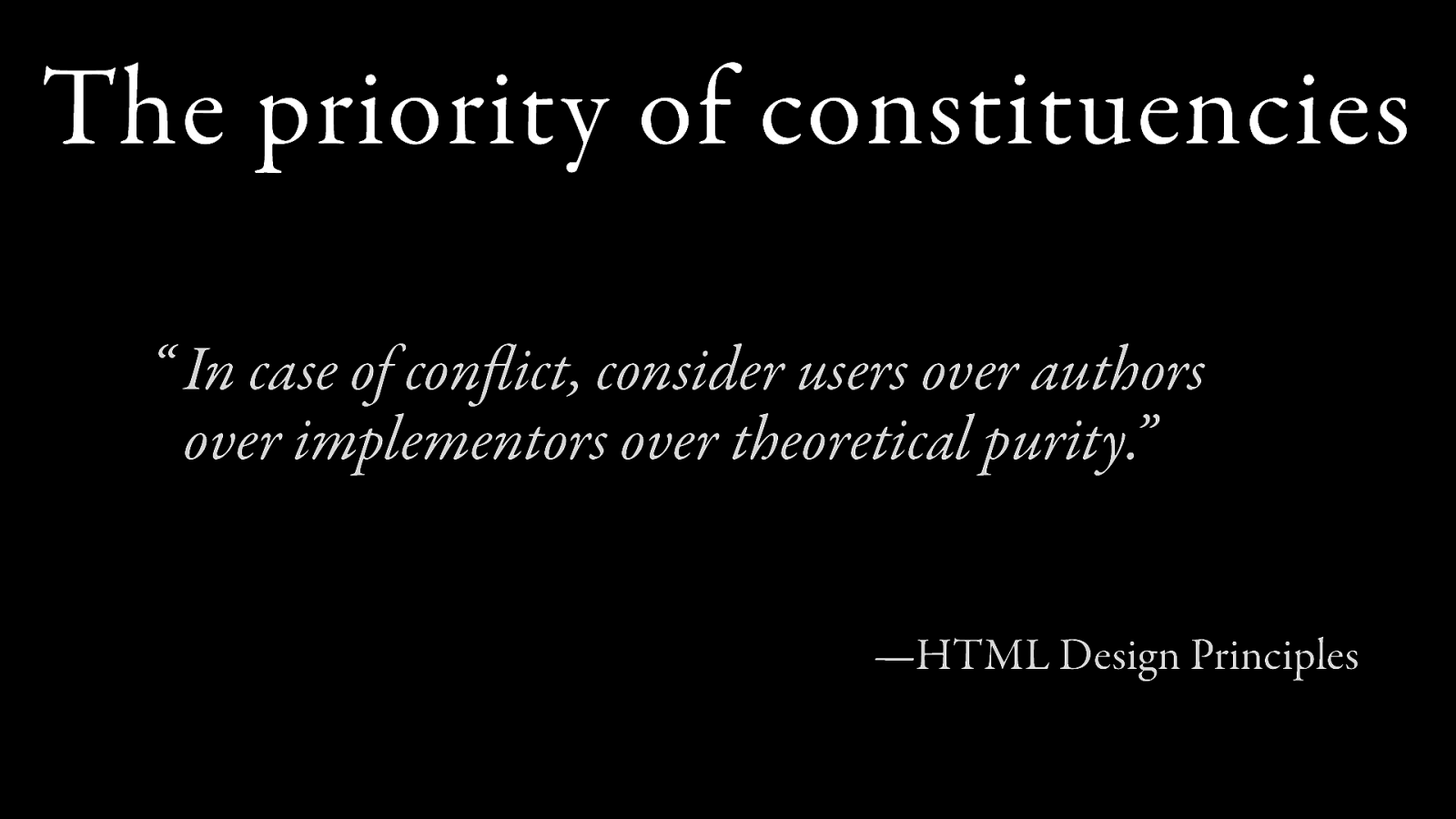

The priority of constituencies “In case of conflict, consider users over authors over implementors over theoretical purity.” —HTML Design Principles

"In case of conflict"—that's exactly what a good design principle does! It establishes the boundaries of agreement. If you disagree with the design principles of a project, there probably isn't much point contributing to that project. Also, it's reversible. You could imagine a different project that favoured theoretical purity above all else. In fact, that's pretty much what XHTML 2 was all about.

Slide 26

XHTML 1 was simply HTML reformulated with the syntax of XML: lowercase tags, lowercase attributes, always quoting attribute values.

Slide 27

Remember HTML doesn't care whether tags and attributes are uppercase or lowercase, or whether you put quotes around your attribute values. You can even leave out some closing tags. So XHTML 1 was actually kind of a nice bit of agreement: professional web developers agreed on using lowercase tags and attributes, and we agreed to quote our attributes. Browsers didn't care one way or the other.

Slide 28

But XHTML 2 was going to take the error-handling model of XML and apply it to HTML. This is the error handling model of XML:

Slide 29

If the parser encounters a single error, don't render the document. Of course nobody agreed to this. Browsers didn't agree to implement XHTML 2. Developers didn't agree to use it. It ceased to exist.
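To make that error-handling model concrete, here is a minimal sketch. It assumes Python's standard-library XML parser as a stand-in for a browser's XML parser; the function name `render_strictly` and the sample markup are made up for illustration.

```python
import xml.etree.ElementTree as ET

def render_strictly(markup):
    """Return the document's text, or None if the parser hits any error."""
    try:
        root = ET.fromstring(markup)
    except ET.ParseError:
        # One well-formedness error is enough: nothing gets rendered.
        return None
    return "".join(root.itertext())

well_formed = "<p>Hello <em>world</em></p>"
one_error = "<p>Hello <em>world</p>"  # the <em> element is never closed

print(render_strictly(well_formed))
print(render_strictly(one_error))
```

The first document comes back as text. The second, with a single unclosed tag, comes back as nothing at all.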

Slide 30

It turns out that creating a format is relatively straightforward. But how do you turn something into a standard? The really hard part is getting agreement.

Slide 31

Sturgeon’s Law states “90% of everything is crap.”

Coincidentally, 90% is also the percentage of the world's crap that gets transported by ocean.

Slide 32

Your clothes, your food, your furniture, your electronics ...chances are that at some point they were transported within an intermodal container. These shipping containers are probably the most visible—and certainly one of the most important—standards in the physical world. Before the use of intermodal containers, loading and unloading cargo from ships was a long, laborious, and dangerous task.

Slide 33

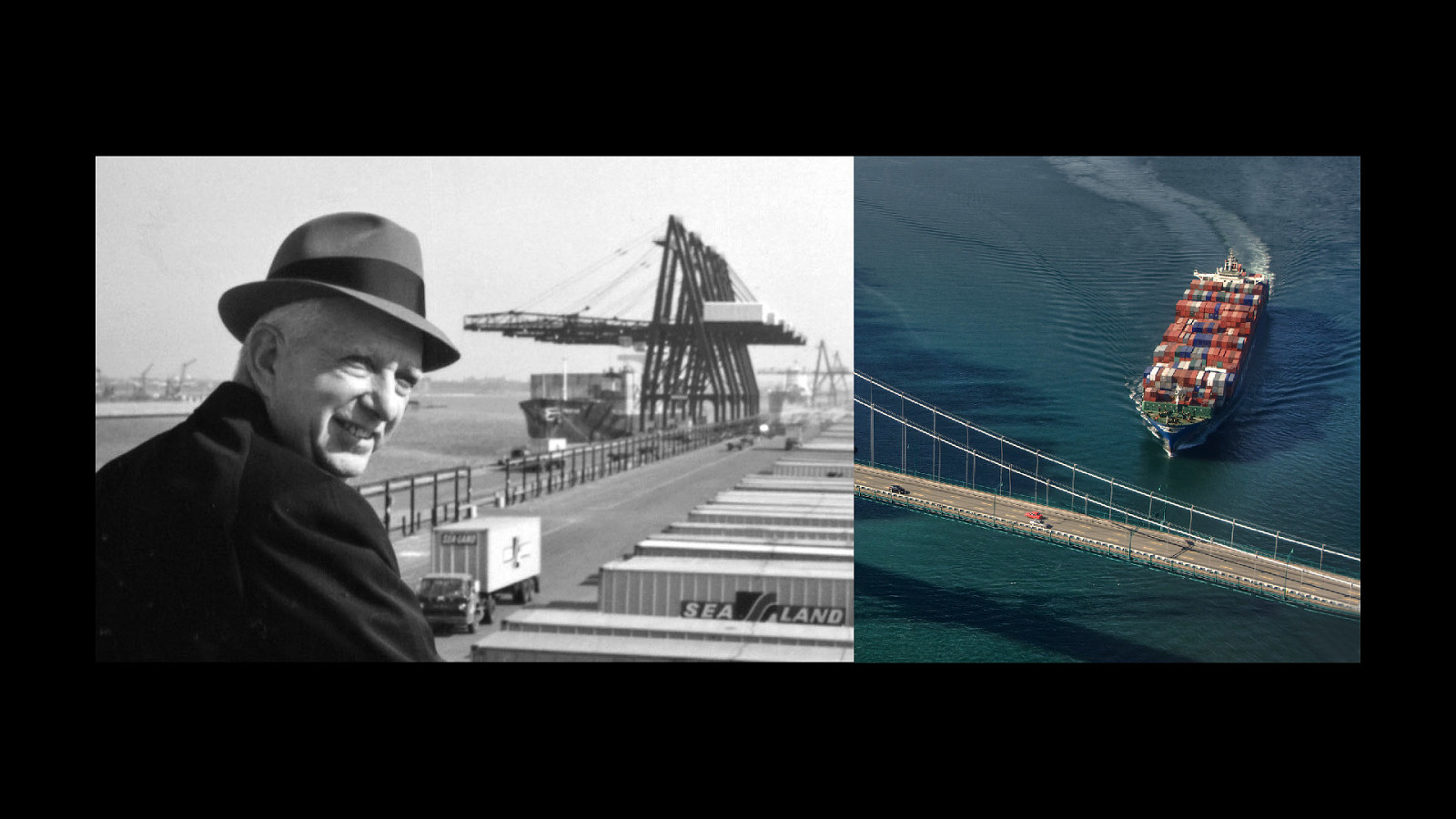

Along came Malcom McLean who realised that the whole process could be made an order of magnitude more efficient if the cargo were stored in containers that could be moved from ship to truck to train. But he wasn't the only one. The movement towards containerisation was already happening independently around the world. But everyone was using different sized containers with different kinds of fittings. If this continued, the result would be a tower of Babel instead of smoothly running global logistics.

Slide 34

Malcom McLean and his engineer Keith Tantlinger designed two crate sizes—20ft and 40ft—that would work for ships, trucks, and trains. Their design also incorporated an ingenious twistlock mechanism to secure containers together. But the extra step that would ensure that their design would win out was this: Tantlinger convinced McLean to give up the patent rights. This wasn't done out of any hippy-dippy ideology. These were hard-nosed businessmen.

Slide 35

But they understood that a rising tide raises all boats, and they wanted all boats to be carrying the same kind of containers. Without the threat of a patent lurking beneath the surface, ready to torpedo the potential benefits, the intermodal container went on to change the world economy. (The world economy is very large and intangible.)

Slide 36

The World Wide Web also ended up changing the world economy, and much more besides. And like the intermodal container, the World Wide Web is patent-free. Again, this was a pragmatic choice to help foster adoption. When Tim Berners-Lee and his colleague Robert Cailliau were trying to get people to use their World Wide Web project they faced some stiff competition. Lots of people were already using Gopher. Anyone remember Gopher?

Slide 37

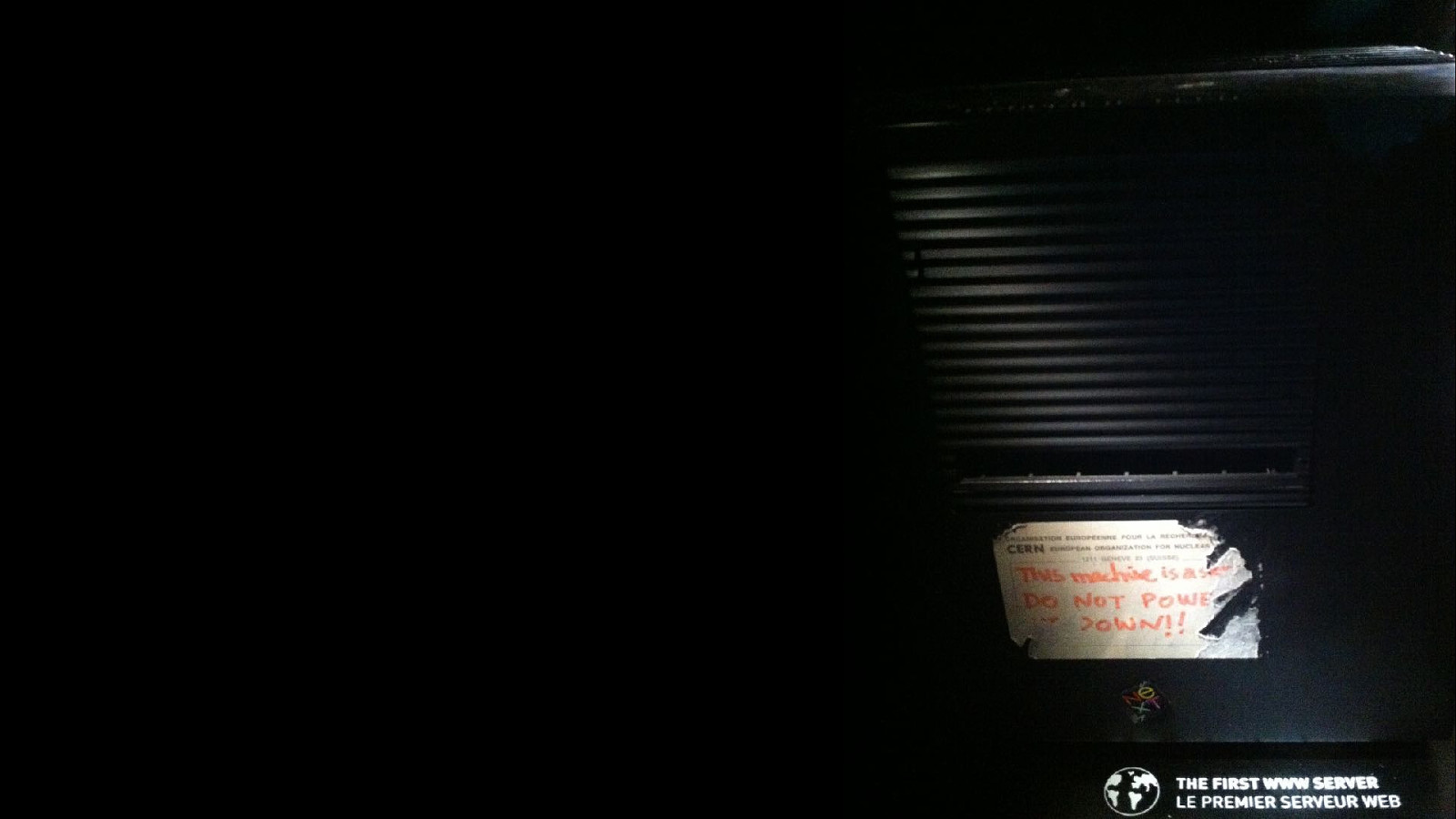

The seemingly unstoppable growth of the Gopher protocol was somewhat hobbled in the early '90s when the University of Minnesota announced that it was going to start charging fees for using it. This was a cautionary lesson for Berners-Lee and Cailliau. They wanted to make sure that CERN didn't make the same mistake.

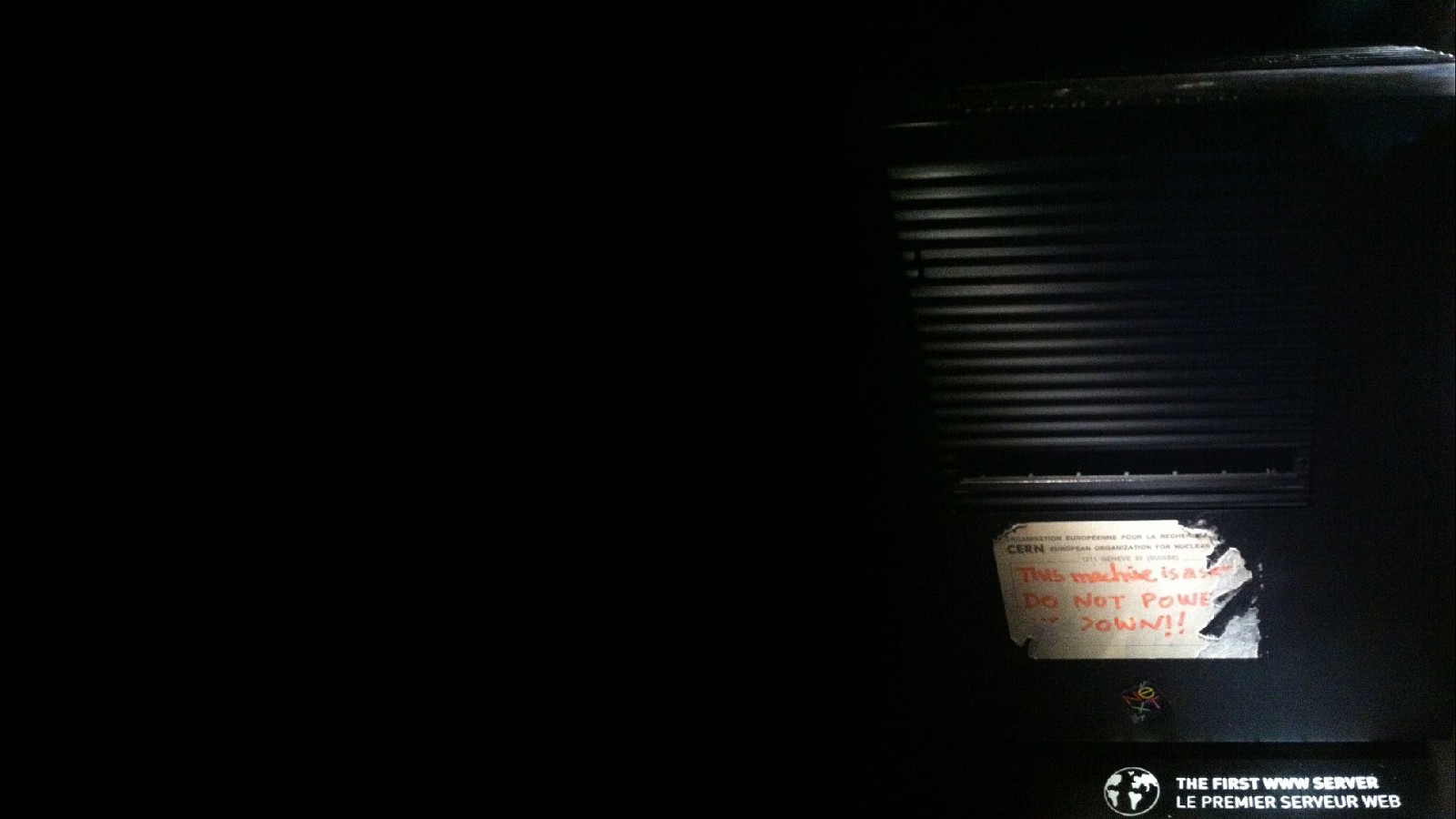

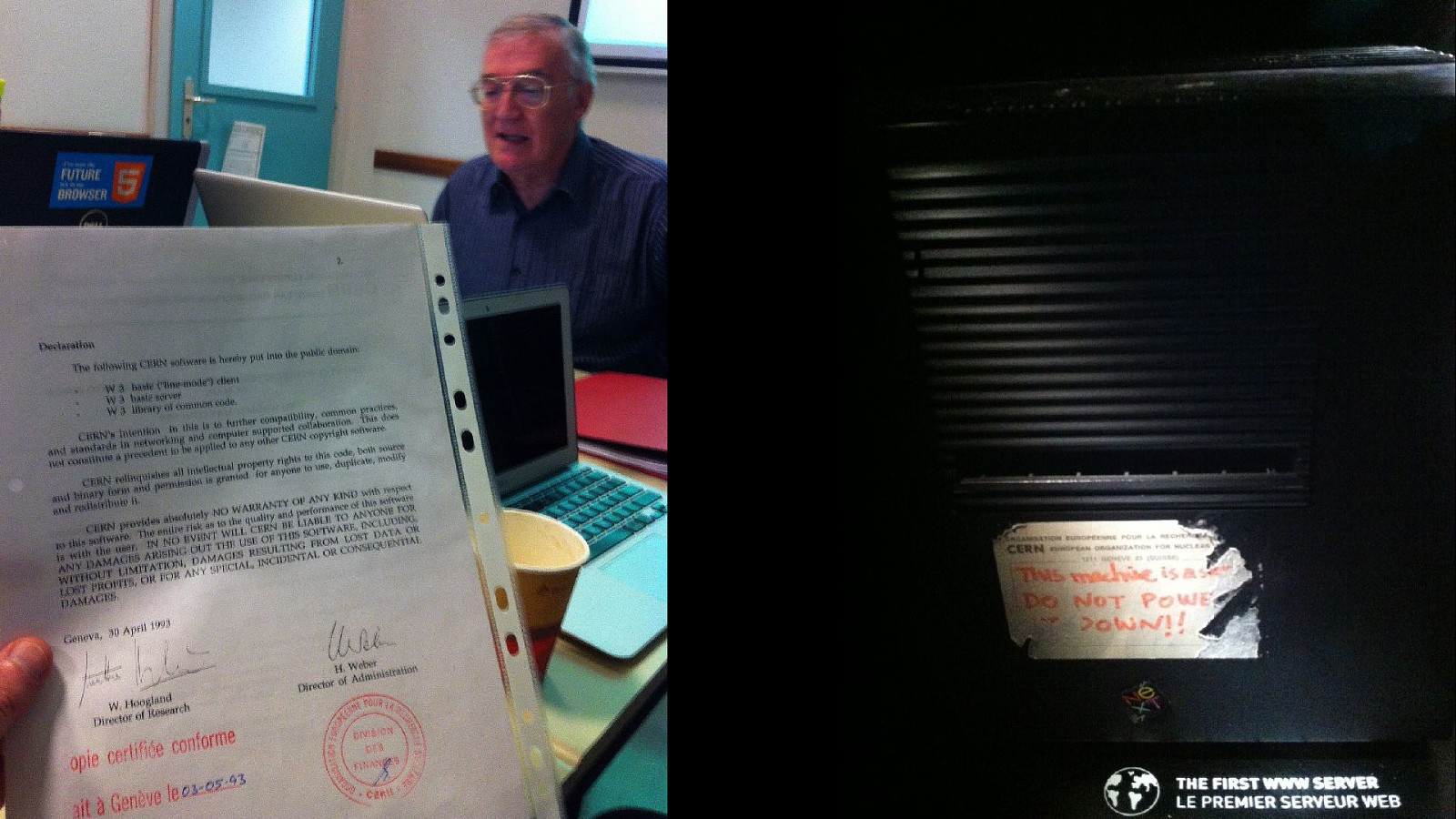

Slide 38

On April 30th, 1993, the code for the World Wide Web project was made freely available. This is for everyone.

Slide 39

If you're trying to get people to adopt a standard or use a new hypertext system, the biggest obstacle you're going to face is inertia.

Slide 40

As the brilliant computer scientist Grace Hopper used to say:

> The most dangerous phrase in the English language is "We've always done it this way."

Rear Admiral Grace Hopper waged war on business as usual. She was well aware how arbitrary business as usual is. Business as usual is simply the current state of our consensus reality. She said:

Slide 41

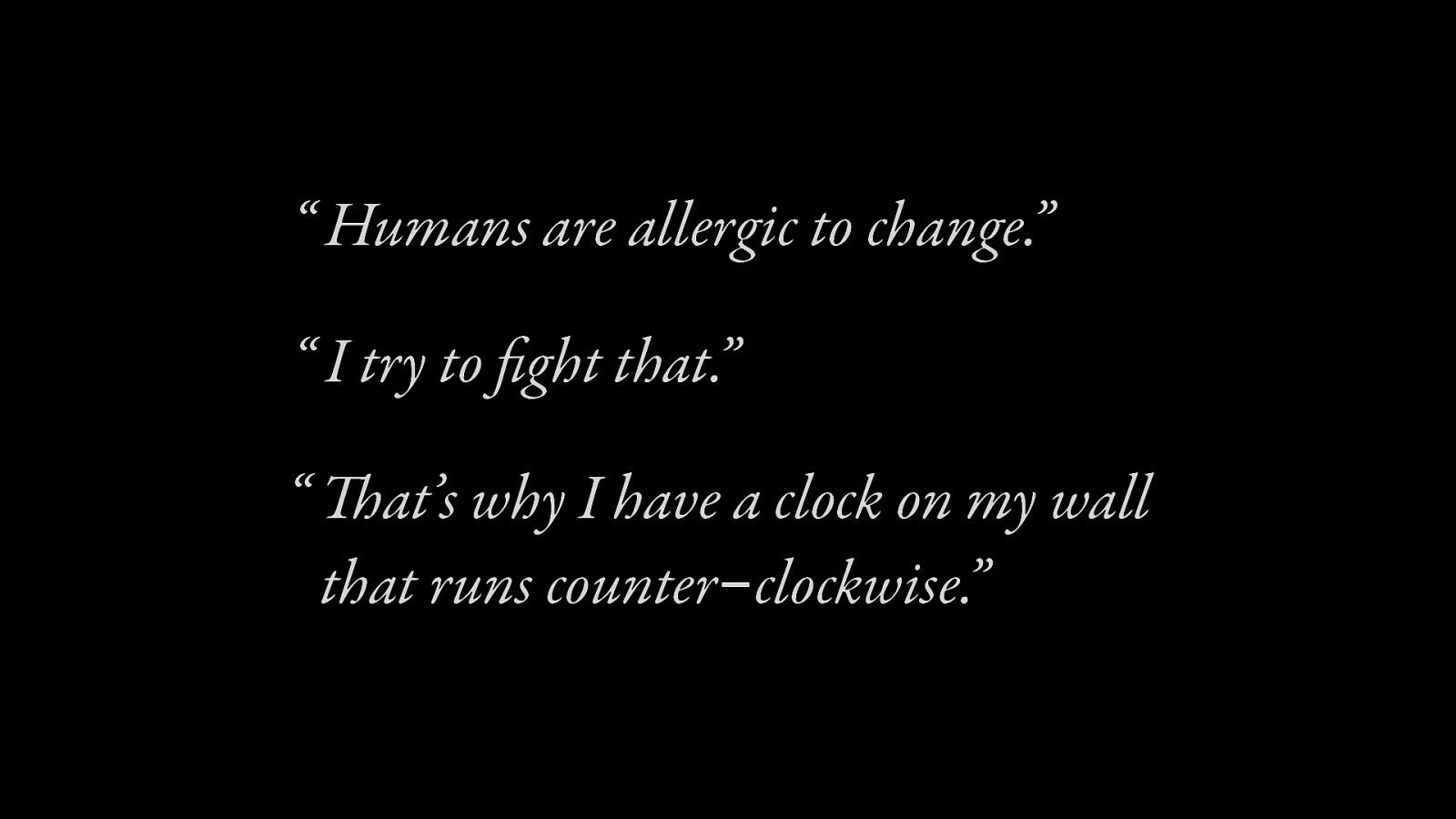

“Humans are allergic to change.” “I try to fight that.” “That’s why I have a clock on my wall that runs counter-clockwise.”

Slide 42

Our clocks are a perfect example of a ubiquitous but arbitrary convention. Why should clocks run clockwise rather than counter-clockwise?

Slide 43

One neat explanation is that clocks are mimicking the movement of a shadow across the face of a sundial ...in the Northern hemisphere. Had clocks been invented in the Southern hemisphere, they would indeed run counter-clockwise.

Slide 44

But on the clock face itself, why do we carve up time into 24 hours?

Slide 45

Why are there 60 minutes in an hour?

Slide 46

Why are there 60 seconds in a minute?

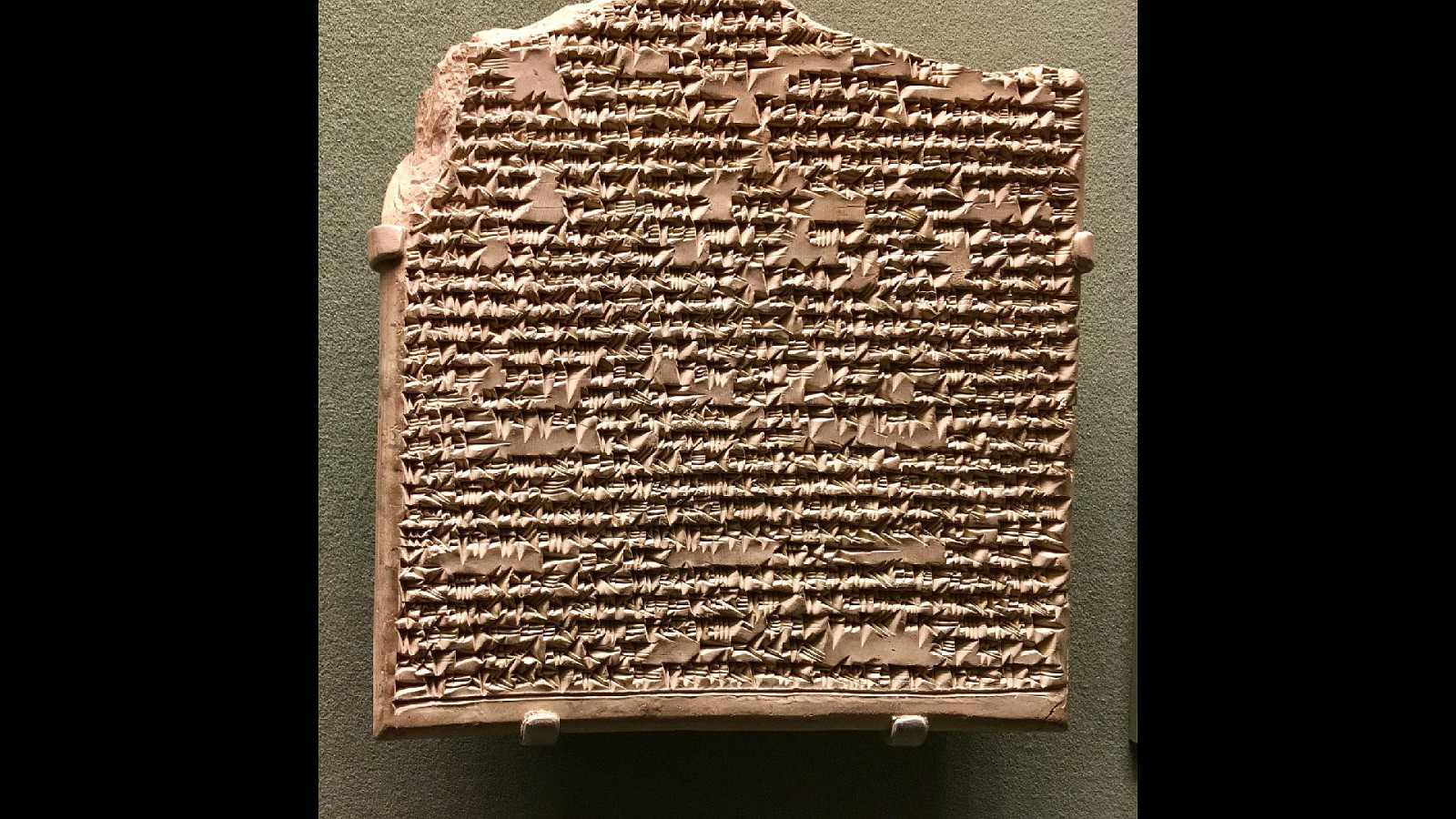

Slide 47

It probably all goes back to Babylonian accountants. Early cuneiform tablets show that they used a sexagesimal system for counting—that's because 60 is the lowest number that can be divided evenly by 6, 5, 4, 3, 2, and 1. But we don't count in base 60; we count in base 10. That in itself is arbitrary—we just happen to have a total of ten digits on our hands. So if the sexagesimal system of telling time is an accident of accounting, and base ten is more widespread, why don't we switch to a decimal timekeeping system?
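The claim about 60 is easy to check with a little arithmetic. This is a minimal sketch: it computes the least common multiple of the numbers 1 to 6, with a small helper `lcm` defined here so it doesn't depend on a recent Python version.

```python
from functools import reduce
from math import gcd

def lcm(a, b):
    """Least common multiple of two positive integers."""
    return a * b // gcd(a, b)

# The smallest number divisible by every one of 1, 2, 3, 4, 5 and 6.
smallest = reduce(lcm, range(1, 7))

# Double-check by brute force: nothing below it divides evenly by all six.
candidates_below = [
    n for n in range(1, smallest)
    if all(n % d == 0 for d in range(1, 7))
]

print(smallest, candidates_below)
```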

Slide 48

It has been tried. The French revolution introduced not just a new decimal calendar—much neater than our base 12 calendar—but also decimal time.

Slide 49

Each day had ten hours.

Slide 50

Each hour had 100 minutes.

Slide 51

Each minute had 100 seconds. So much better! It didn't take. Humans are allergic to change. Sexagesimal time may be arbitrary and messy but ...we've always done it this way.
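For the curious, here's a rough sketch of how a time on today's clocks would map onto that decimal clock. It assumes only the figures above: 10 hours of 100 minutes of 100 seconds gives 100,000 decimal seconds in a day, against our usual 86,400. The function name `to_decimal_time` is invented for illustration.

```python
STANDARD_SECONDS_PER_DAY = 24 * 60 * 60    # 86,400
DECIMAL_SECONDS_PER_DAY = 10 * 100 * 100   # 100,000

def to_decimal_time(hours, minutes, seconds=0):
    """Convert a 24-hour clock time to (hours, minutes, seconds) in decimal time."""
    elapsed = hours * 3600 + minutes * 60 + seconds
    # Scale the elapsed part of the day onto the decimal clock (rounding down).
    decimal_elapsed = elapsed * DECIMAL_SECONDS_PER_DAY // STANDARD_SECONDS_PER_DAY
    decimal_hours, remainder = divmod(decimal_elapsed, 100 * 100)
    decimal_minutes, decimal_seconds = divmod(remainder, 100)
    return decimal_hours, decimal_minutes, decimal_seconds

print(to_decimal_time(12, 0))   # midday
print(to_decimal_time(18, 0))   # six in the evening
```

Midday lands on five o'clock exactly, and six in the evening becomes half past seven.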

Slide 52

Incidentally, this is also why I'm not holding my breath in anticipation of the USA ever switching to the metric system. Instead of trying to completely change people's behaviour, you're likely to have more success by incrementally and subtly altering what people are used to. That was certainly the case with the World Wide Web.

Slide 53

The Hypertext Transfer Protocol sits on top of the existing TCP/IP stack.

Slide 54

The key building block of the web is the URL.

Slide 55

But instead of creating an entirely new addressing scheme, the web uses the existing Domain Name System. Then there's the lingua franca of the World Wide Web...

Slide 56

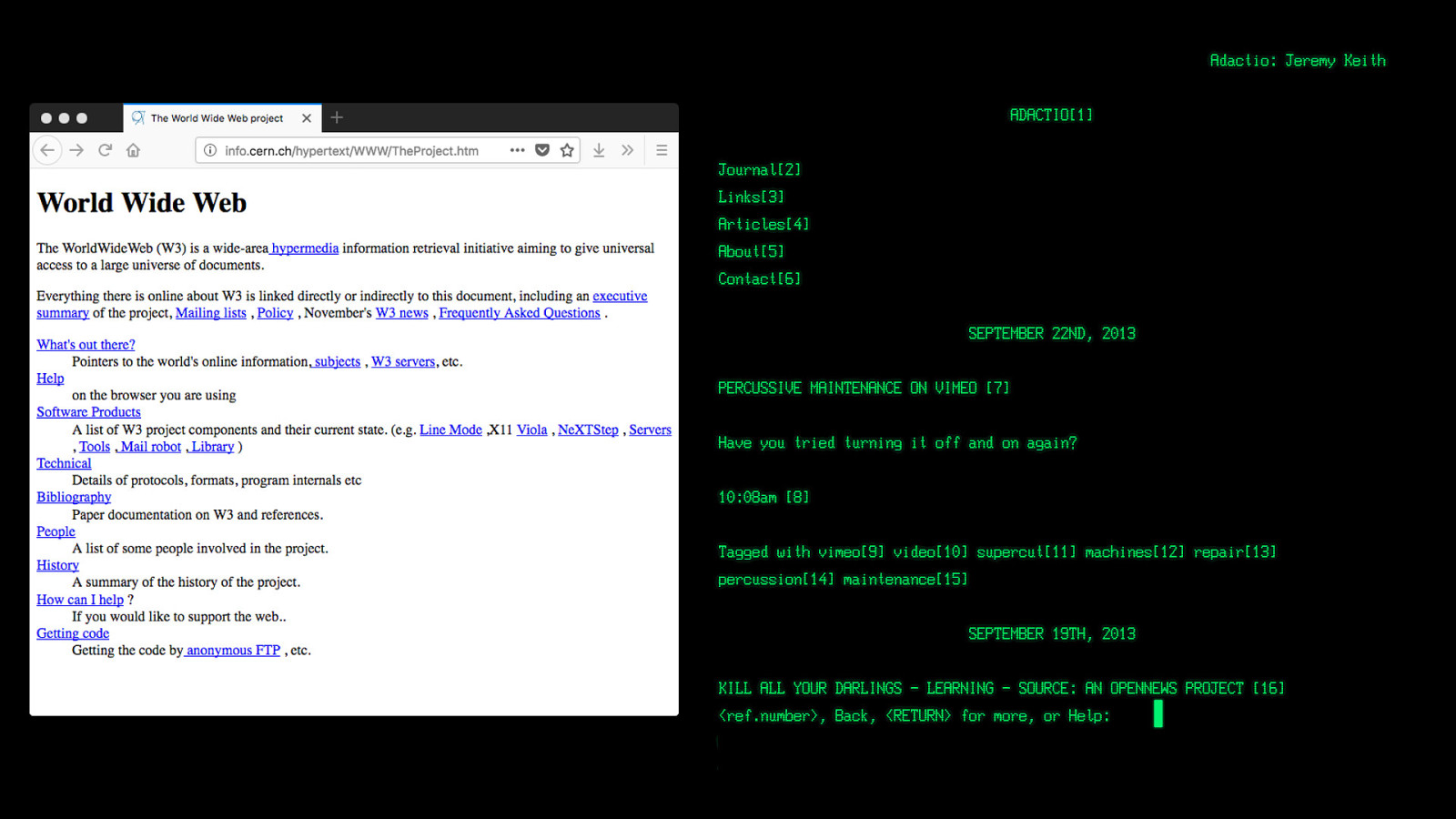

These elements probably look familiar to you. You recognise this language, right? That's right—

Slide 57

...it's SGML. Standard Generalised Markup Language. Specifically, it's CERN SGML—a flavour of SGML that was already popular at CERN when Tim Berners-Lee was working on the World Wide Web project. He used this vocabulary as the basis for the HyperText Markup Language.

Slide 58

Because this vocabulary was already familiar to people at CERN, convincing them to use HTML wasn't too much of a hard sell. They could take an existing SGML document, change the file extension to .htm and it would work in one of those newfangled web browsers. In fact, HTML worked better than expected. The initial idea was that HTML pages would be little more than indices that pointed to other files containing the real meat and potatoes of content—spreadsheets, word processing documents, whatever. But to everyone's surprise, people started writing and publishing content in HTML.

Slide 59

Was HTML the best format? Far from it. But it was just good enough and easy enough to get the job done. It has since changed, but that change has happened according to another design principle:

Slide 60

Evolution, not revolution —HTML Design Principles

From its humble beginnings with the handful of elements borrowed from CERN SGML, HTML has grown to encompass an additional 100 elements over its lifespan. And yet, it's still technically the same format! This is a classic example of the paradox called the Ship Of Theseus, also known as...

Slide 61

Trigger's Broom.

Slide 62

You can take an HTML document written over two decades ago, and open it in a browser today.

Slide 63

Even more astonishing, you can take an HTML document written today and open it in a browser from two decades ago. That's because the error-handling model of HTML has always been to simply ignore any tags it doesn't recognise and render the content inside them. That pattern of behaviour is a direct result of the design principle:
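Here's a minimal sketch of that behaviour. It is not how any real browser is implemented: it uses Python's standard-library `HTMLParser` to play the part of an older browser, and the `KNOWN_TAGS` vocabulary, the `OldBrowser` class, and the `render` function are all hypothetical names made up for the example.

```python
from html.parser import HTMLParser

# Hypothetical vocabulary of an older browser: it has never heard of <article>.
KNOWN_TAGS = {"h1", "p", "a"}

class OldBrowser(HTMLParser):
    """Skips over tags it doesn't recognise but keeps the content inside them."""

    def __init__(self):
        super().__init__()
        self.rendered = []
        self.ignored = []

    def handle_starttag(self, tag, attrs):
        if tag not in KNOWN_TAGS:
            self.ignored.append(tag)  # note the unknown tag and carry on

    def handle_data(self, data):
        self.rendered.append(data)    # text is kept whatever tag wraps it

def render(markup):
    browser = OldBrowser()
    browser.feed(markup)
    browser.close()
    return "".join(browser.rendered), browser.ignored

text, ignored = render("<article><h1>News</h1><p>Still readable.</p></article>")
print(text, ignored)
```

The unknown element is skipped, and every word inside it still reaches the reader.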

Slide 64

Degrade gracefully “…document conformance requirements should be designed so that Web content can degrade gracefully in older or less capable user agents, even when making use of new elements, attributes, APIs and content models.” —HTML Design Principles

Slide 65

Here's a picture from 2006.

Slide 66

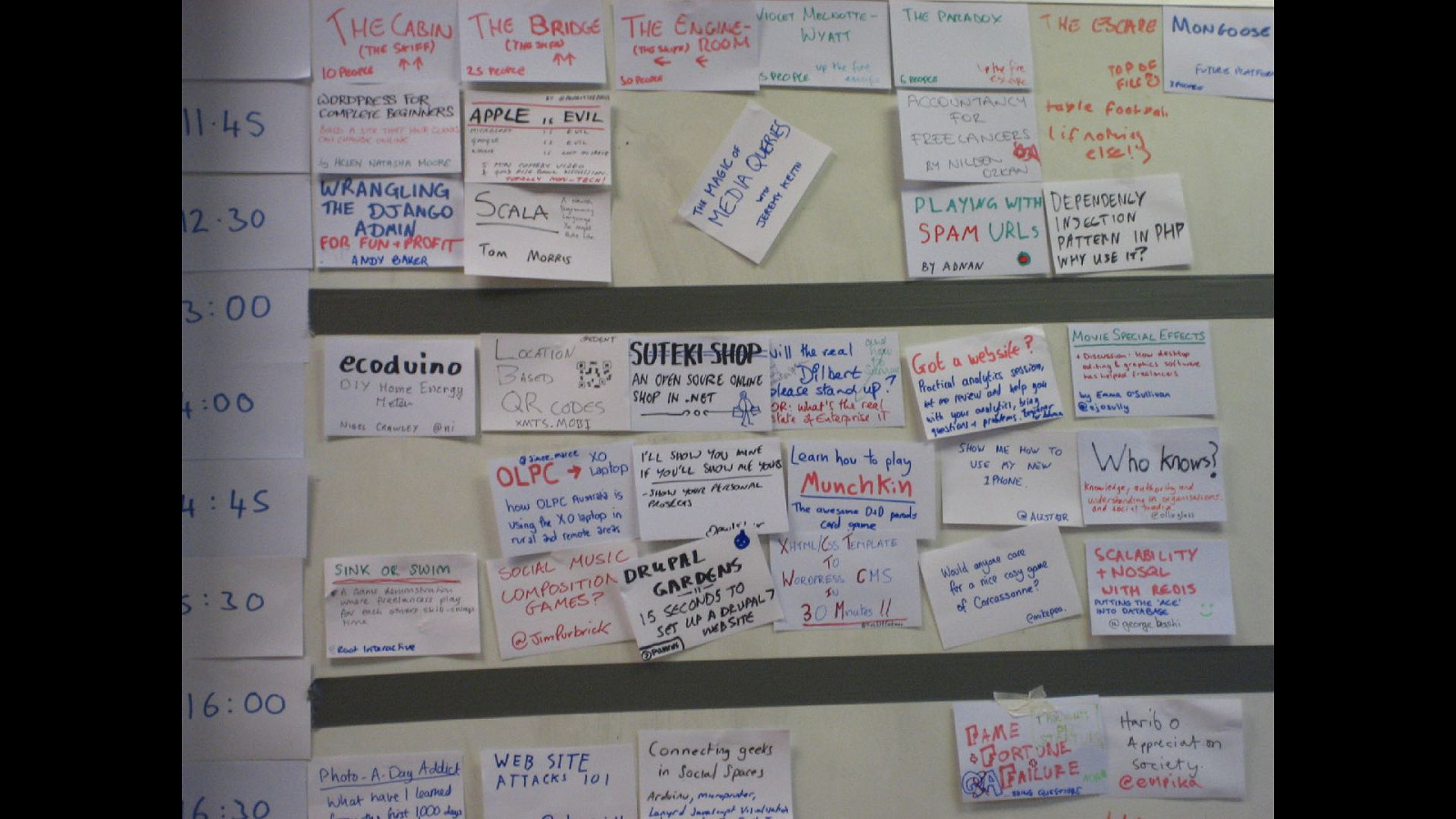

That's me in the cowboy hat—the picture was taken in Austin, Texas. This is an impromptu gathering of people involved in the microformats community. Microformats, like any other standards, are sets of agreements. In this case, they're agreements on which class values to use to mark up some of the missing elements from HTML—people, places, and events. That's pretty much it. And yes, they do have design principles—some very good ones—but that's not why I'm showing this picture. Some of the people in this picture—Tantek Çelik, Ryan King, and Chris Messina—were involved in the creation of BarCamp, a series of grassroots geek gatherings.

Slide 67

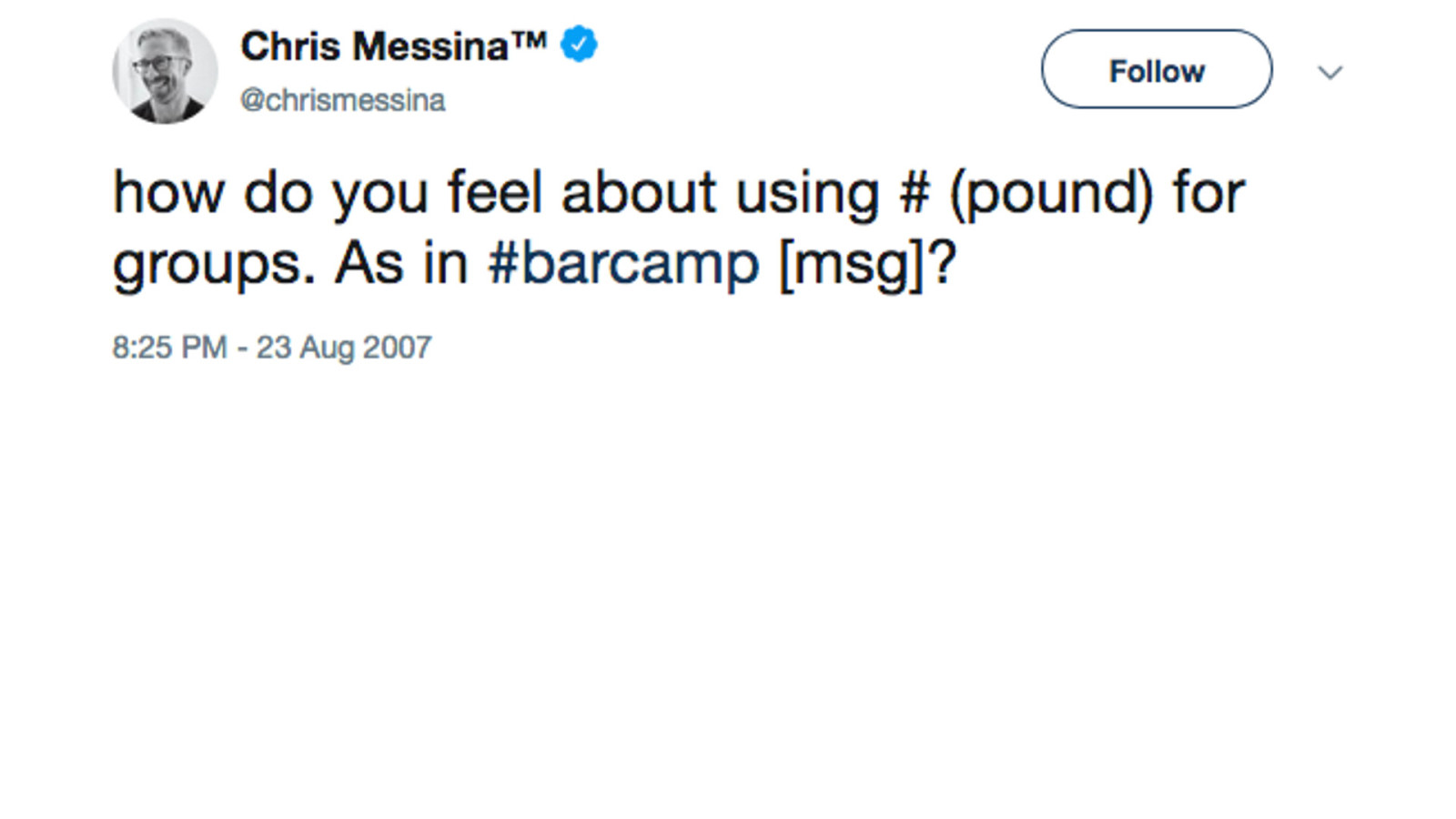

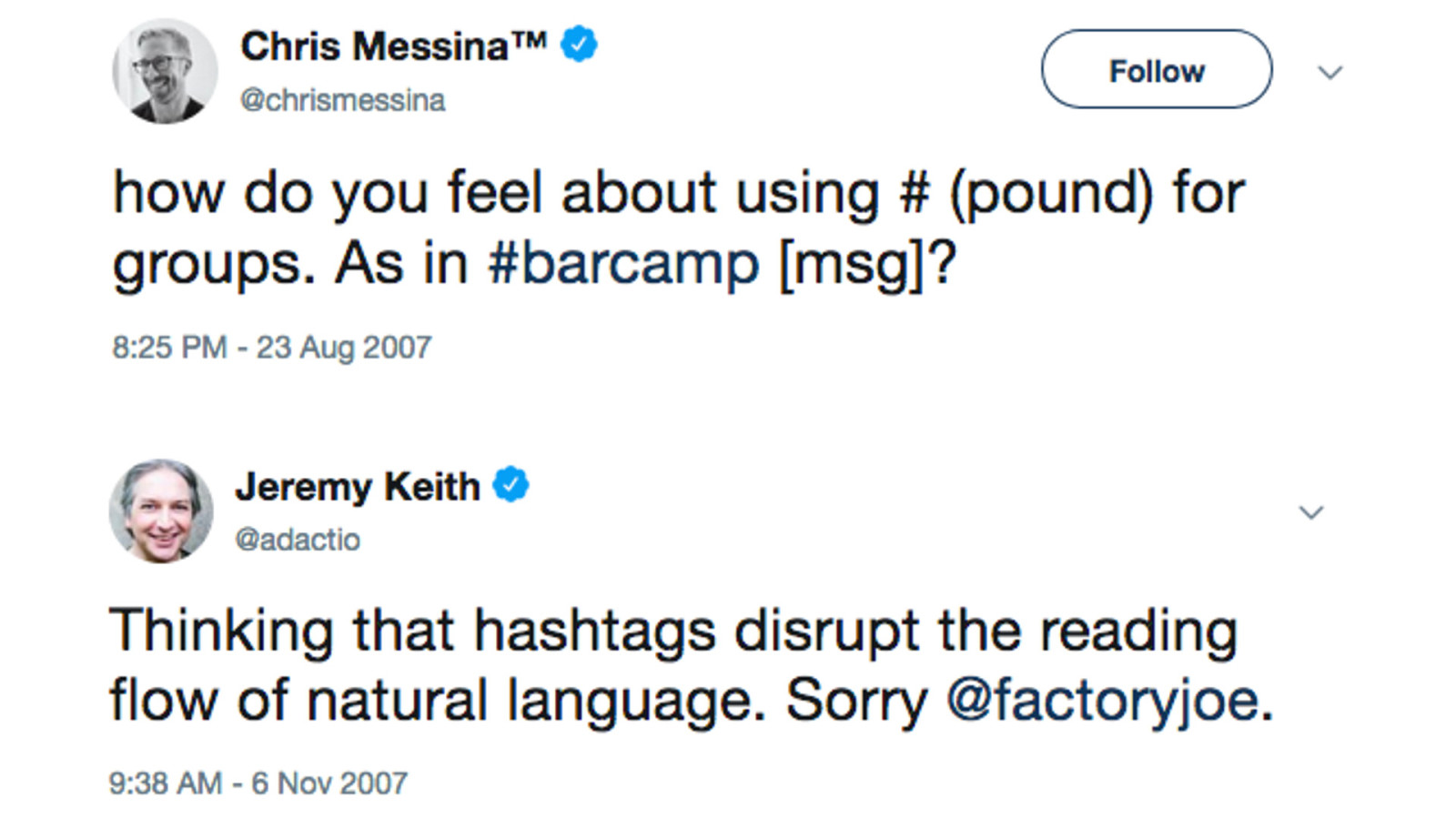

BarCamps sound like they shouldn't work, but they do. The schedule for the event is arrived at collectively at the beginning of the gathering. It's kind of amazing how the agreement emerges—rough consensus and running events. In the run-up to a BarCamp in 2007, Chris Messina posted this to the fledgeling social networking site, twitter.com:

Slide 68

“how do you feel about using # (pound) for groups. As in #barcamp”

This was when tagging was all the rage. We were all about folksonomies back then. Chris proposed that we would call this a "hashtag".

Slide 69

I wasn't a fan. But it didn't matter what I thought. People agreed to this convention, and after a while Twitter began turning the hashtagged words into links. In doing so, they were following another HTML design principle:

Slide 70

Pave the cowpaths

It sounds like advice for agrarian architects, but its meaning is clarified:

“When a practice is already widespread among authors, consider adopting it rather than forbidding it or inventing something new.”

Slide 71

Twitter had previously paved a cowpath when people started prefacing usernames with the @ symbol. That convention didn't come from Twitter, but they didn't try to stop it. They rolled with it, and turned any username prefaced with an @ symbol into a link. The @ symbol made sense because people were used to using it from email. The choice to use that symbol in email addresses was made by Ray Tomlinson. He needed a symbol to separate the person and the domain, looked down at his keyboard, saw the @ symbol, and thought "that'll do."
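Paving these two cowpaths, hashtags and @ usernames, can be sketched in a few lines. This is an illustration only, not Twitter's actual implementation: the `linkify` function and the `/tags/` and `/users/` URL paths are assumptions invented for the example.

```python
import re
from html import escape

def linkify(text):
    """Turn #hashtags and @usernames in plain text into HTML links."""
    # Escape &, < and > first so the text is safe to drop into a page.
    safe = escape(text, quote=False)
    # The (?<!\w) lookbehind leaves email addresses like me@example.com alone.
    safe = re.sub(r"(?<!\w)#(\w+)", r'<a href="/tags/\1">#\1</a>', safe)
    safe = re.sub(r"(?<!\w)@(\w+)", r'<a href="/users/\1">@\1</a>', safe)
    return safe

print(linkify("See you at #barcamp, @chris"))
print(linkify("Mail me@example.com"))
```

The convention already exists in what people type. All the code does is recognise it and make it clickable.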

Slide 72

Perhaps Chris followed a similar process when he proposed the symbol for the hashtag. It could have just as easily been called a "number tag" or "octothorpe tag" or "pound tag". This symbol started life as a shortcut for "pound", or more specifically "libra pondo", meaning a pound in weight.

Slide 73

lb Libra pondo was abbreviated to lb when written.

Slide 74

That got turned into a ligature ℔ when written hastily. That shape was the common ancestor of two symbols we use today: £ and #.

Slide 75

The eight-pointed symbol was (perhaps jokingly) renamed the octothorpe in the 1960s when it was added to telephone keypads. It's still there on the digital keypad of your mobile phone. If you were to ask someone born in this millennium what that key is called, they would probably tell you it's the hashtag key.

Slide 76

And if they're learning to read sheet music, I've heard tell that they refer to the sharp notes as hashtag notes. If this upsets you, you might be the kind of person who rages at the word "literally" being used to mean "figuratively" or supermarkets with aisles for "10 items or less" instead of "10 items or fewer".

Slide 77

Tough luck. The English language is agreement. That's why English dictionaries exist not to dictate usage of the language, but to document usage.

Slide 78

It's much the same with web standards bodies. They don't carve the standards into tablets of stone and then come down the mountain to distribute them amongst the browsers. No, it's what the browsers implement that gets carved in stone. That's why it's so important that browsers are in agreement. In the bad old days of the browser wars of the late 90s, we saw what happened when browsers implemented their own proprietary features.

Slide 79

Standards require interoperability. Interoperability requires agreement.

Slide 80

So what can we learn from the history of standardisation?

Slide 81

Well, there are some direct lessons from the HTML design principles.

Slide 82

The priority of constituencies

Listen, I want developer convenience as much as the next developer. But never at the expense of user needs. I've often said that if I have the choice between making something my problem, and making it the user's problem, I'll make it my problem every time. That's the job. I worry that these days developer convenience is sometimes prized more highly than user needs. I think we could all use a priority of constituencies on every project we work on, and I would hope that we would prioritise users over authors.

Slide 83

Degrade gracefully.

I know that I go on about progressive enhancement a lot. Sometimes I make it sound like a silver bullet. Well, it kinda is. I mean, you can't just buy a bullet made of silver—you have to make it yourself. If you're not used to crafting bullets from silver, it will take some getting used to. Again, if developer convenience is your priority, silver bullets are hard to justify. But if you're prioritising users over authors, progressive enhancement is the logical methodology to use.

Slide 84

Evolution, not revolution…

It's a testament to the power and flexibility of the web that we don't have to build with progressive enhancement. We don't have to build with a separation of concerns like structure, presentation, and behaviour. We don't have to use what the browser gives us: buttons, dropdowns, hyperlinks. If we want to, we can make these things from scratch using JavaScript, divs and ARIA attributes. But why do that? Is it because those native buttons and dropdowns might be inconsistent from browser to browser?

Slide 85

Consistency is not the purpose of the world wide web.

Slide 86

Universality is the key principle underlying the web.

Slide 87

Our patterns should reflect the intent of the medium. Use what the browser gives you—build on top of those agreements.

Slide 88

Because that's the bigger lesson to be learned from the history of web standards, clocks, containers, and hashtags.

Slide 89

Our world is made up of incremental improvements to what has come before.

Slide 90

And that's how we will push forward to a better tomorrow: By building on top of what we already have instead of trying to create something entirely from scratch. And by working together to get agreement instead of going it alone.

Slide 91

The future can be a frightening prospect, and I often get people asking me for advice on how they should prepare for the web's future. Usually they're thinking about which programming language or framework or library they should be investing their time in. But these specific patterns matter much less than the broader principles of working together, collaborating and coming to agreement. It's kind of insulting that we refer to these as "soft skills"—they couldn't be more important.

Slide 92

Working on the web, it's easy to get downhearted by the seemingly ephemeral nature of what we build. None of it is "real"; none of it is tangible. And yet, looking at the history of civilisation, it's the intangibles that survive: ideas, philosophies, cultures and concepts. The future can be frightening because it is intangible and unknown. But like all the intangible pieces of our consensus reality, the future is something we collectively construct ...through agreement.

Slide 93

Now let's agree to go forward together to build the future web!